Learn

Meet your match.

A pick-and-place for rapid prototyping

Welcome to our Part IV Project!

This page is intended as a one-stop shop for understanding the operating principles and features of our pick-and-place machine.

Whether you're looking to hone your skills or are simply after an in-depth look at our machine, we hope that this page hits just the right spot.

James Bao and Sam Skinner,

Electrical and Computer Engineering @ The University of Auckland.

Application Interface

Constituent Pages

Machine Architecture

Open- and Closed-Loop Control

We will first define the objective, and what is meant by ‘an open-loop machine designed with provisions for closed-loop active assistance’.

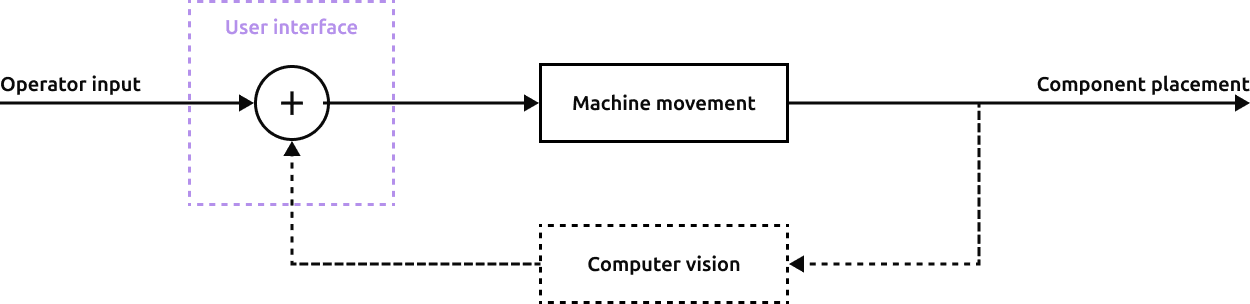

The proposed real-time pick-and-place machine is modelled as a control systems block diagram, as shown in the accompanying figure. The operator's input is received by the machine through the input device, which is treated initially as a black box. This input is fed through the user interface to be interpreted, resulting in a movement of the machine gantry and the mounted vacuum head. This represents the open-loop control path of the machine.
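As a rough illustration of this open-loop path, the sketch below turns one operator input into a gantry move. The `JogInput` type, the `jog_to_gcode` function, and the default feed rate are hypothetical names and values chosen for this example. The source only states that the gantry module uses G-code, so the specific commands (standard `G91`, `G1`, `G90`) are an assumption about how a jog might be expressed, not the machine's actual command set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JogInput:
    """Hypothetical operator input: a requested displacement in millimetres."""
    dx_mm: float
    dy_mm: float


def jog_to_gcode(jog: JogInput, feed_mm_per_min: float = 1500.0) -> list[str]:
    """Translate one operator jog into relative-mode G-code lines.

    This is open loop: the move is commanded, and nothing measures
    where the gantry actually ends up.
    """
    return [
        "G91",  # switch to relative positioning
        f"G1 X{jog.dx_mm:.3f} Y{jog.dy_mm:.3f} F{feed_mm_per_min:.0f}",
        "G90",  # restore absolute positioning
    ]


if __name__ == "__main__":
    for line in jog_to_gcode(JogInput(dx_mm=2.5, dy_mm=-1.0)):
        print(line)
```

Nothing in this path checks the result of the move, which is what distinguishes it from the assisted, closed-loop mode.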

It is proposed that computer vision (CV) ‘feedback’ can be introduced to this open-loop control path to achieve enhanced machine performance. This CV would provide active alignment assistance to the human operator, offloading the burden of fine positioning to the machine. In such an operating profile, the operator would be solely responsible for rough position identification. Once the component's leads have been roughly associated with their corresponding pads, active alignment assistance would correct any rotational error and perform the final ‘wicking’ of the component into place.
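To make the idea of feedback concrete, here is a minimal simulation of a closed-loop correction along one axis. It assumes a simple proportional scheme in which each step removes a fixed fraction of the measured error. The function name, gain, and tolerance are illustrative assumptions, and the CV measurement is replaced by a stand-in; the source does not specify the control law used.

```python
def assist_align(
    error_mm: float,
    gain: float = 0.5,
    tolerance_mm: float = 0.05,
    max_steps: int = 50,
) -> list[float]:
    """Return the residual alignment error after each correction step.

    The operator's rough placement leaves an initial error. Each loop
    iteration 'measures' the error (a stand-in for a CV measurement)
    and commands a move that removes a fraction `gain` of it.
    """
    history = [error_mm]
    for _ in range(max_steps):
        if abs(error_mm) <= tolerance_mm:
            break  # close enough: stop correcting
        measured = error_mm           # stand-in for the CV measurement
        error_mm -= gain * measured   # proportional correction
        history.append(error_mm)
    return history


if __name__ == "__main__":
    # Operator leaves the part 1 mm off; the assist halves the error each step.
    print(assist_align(1.0))
```

With a gain of 0.5 and a 1 mm starting error, the residual halves on each step until it falls within the tolerance.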

Machine Modules

To achieve the desired closed-loop feedback, a mechanical system that provides two real-time video feeds is devised. One real-time video feed captures the PCB's pads, and another captures the picked component's leads. This real-time video is fed to a CV algorithm to identify the gantry translations and head rotations required to achieve wicking.
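One way to derive a translation and a rotation from the two feeds is a least-squares rigid fit between matched points. The sketch below assumes that lead and pad centres have already been detected, matched one-to-one, and expressed in a common coordinate frame and unit. None of these steps is described in the source, and `alignment_correction` is a hypothetical name. It is a generic 2D Procrustes fit, not necessarily the project's algorithm.

```python
import math

Point = tuple[float, float]


def centroid(points: list[Point]) -> Point:
    """Mean position of a list of 2D points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def alignment_correction(leads: list[Point], pads: list[Point]) -> tuple[float, float, float]:
    """Return (dx, dy, rotation_deg) that best maps the leads onto the pads.

    Assumes leads[i] corresponds to pads[i]. The rotation is taken about
    the lead centroid (counter-clockwise positive), and (dx, dy) moves
    the lead centroid onto the pad centroid.
    """
    if len(leads) != len(pads) or len(leads) < 2:
        raise ValueError("need at least two matched lead/pad pairs")

    lcx, lcy = centroid(leads)
    pcx, pcy = centroid(pads)

    dot = 0.0    # accumulates a . b over centred pairs
    cross = 0.0  # accumulates a x b over centred pairs
    for (lx, ly), (px, py) in zip(leads, pads):
        ax, ay = lx - lcx, ly - lcy
        bx, by = px - pcx, py - pcy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    rotation_deg = math.degrees(math.atan2(cross, dot))
    return (pcx - lcx, pcy - lcy, rotation_deg)


if __name__ == "__main__":
    leads = [(0.0, 0.0), (2.0, 0.0)]
    pads = [(4.0, 3.0), (4.0, 5.0)]  # leads rotated 90 degrees, then shifted
    print(alignment_correction(leads, pads))
```

In this framing, the head would apply the rotation and the gantry the translation. A real system would also need camera calibration to bring both feeds into one frame.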

The experimental prototype comprises three primary software modules and three supplementary electromechanical components, as diagrammed below. The machine software comprises the machine controller, the user interface, and the CV routines.

| Module | Technologies | Description |
|---|---|---|
| p4p-83/gantry | C, C++, G-code | The low-level machine control of our stepper motors and limit switches. |
| p4p-83/vision | C++, Julia, libcamera, ffmpeg, WebRTC | The machine vision that makes our pick-and-place intelligent. |
| p4p-83/controller | C++, Julia, Protobufs, WebRTC, WebSockets | The command & control that conducts the entire orchestra. |
| p4p-83/interface | TypeScript, Next.js, React, Tailwind CSS, shadcn/ui, Zod, Protobufs | This web application; displays the real-time video feeds to the human operator, and receives & interprets their inputs. |

A single 4 GB Raspberry Pi 5 runs all three software modules. The user interface may be served to any client browser that is networked to the Raspberry Pi, or run on an operator's own device, provided that the device has a network connection to the Raspberry Pi over which the real-time WebRTC video feed and the WebSocket connections to the machine controller can be established.

In this section, we outline these modules, the roles that they each play, the relevant technical details, and the interactions between them. The overarching focus is on these system behaviours and interactions, so many of the following headings necessarily cross module boundaries.
